The A/B test component can send out a maximum of seven different versions of an email to the selected contacts. For example, you could test different subject lines or call-to-action buttons in different versions of an email to evaluate which one gets the best response from your contacts.

The email with the best response is used in the journey to target the remaining contacts. The best response may be based on the view rate, the click-through rate, the revenue (Webtracker) or the order count (Webtracker). The length of the A/B test period determines when the best version of the email will be sent.

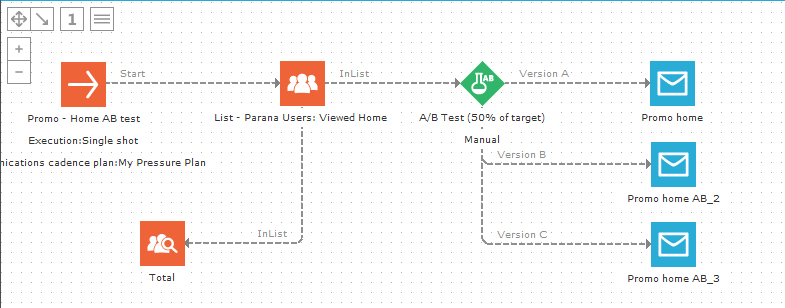

Typically, the A/B test component is located just after an Audience list component and before the email message components.

NOTE: A/B test components can also be placed just after a control group. In this case, the A/B test is performed on only a segment of the initial contacts, based on the control group selected in the journey.

Example:

This is an example of a journey in which three versions of an email are sent out:

Events

The A/B test component generates one event per email version to be sent. Each event points to a different version of the email.

Properties

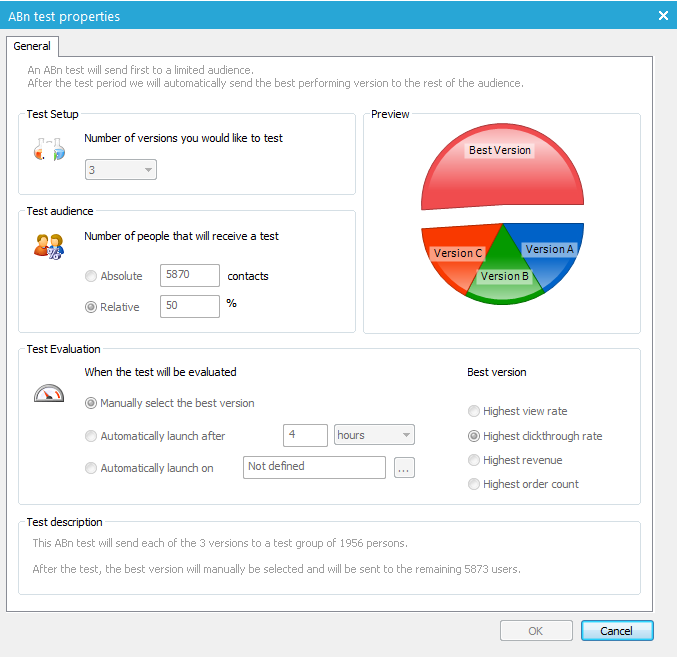

1. Select the Number of versions you would like to test. A maximum of seven are allowed.

2. Next, define the Test audience. This can either be an absolute number of contacts in the journey's audience or a relative percentage of that audience.
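The two ways of defining the Test audience can be illustrated with a short calculation. This is a minimal sketch, not the product's implementation: the function names `compute_test_audience` and `split_over_versions` are hypothetical, and both rounding down and dividing the test audience equally over the versions are assumptions.

```python
import math

MAX_VERSIONS = 7  # the component allows at most seven versions


def compute_test_audience(audience: int, value: float, relative: bool) -> int:
    """Return the number of contacts that take part in the test.

    relative=True  -> value is a percentage of the audience (0-100)
    relative=False -> value is an absolute number of contacts
    """
    if relative:
        if not 0 <= value <= 100:
            raise ValueError("percentage must be between 0 and 100")
        size = math.floor(audience * value / 100)  # rounding down is an assumption
    else:
        size = int(value)
    # The test audience can never exceed the journey's audience.
    return max(0, min(size, audience))


def split_over_versions(test_size: int, versions: int) -> list[int]:
    """Divide the test audience over the versions (equal split is an assumption)."""
    if not 1 <= versions <= MAX_VERSIONS:
        raise ValueError("versions must be between 1 and 7")
    base, extra = divmod(test_size, versions)
    # The first `extra` versions receive one additional contact.
    return [base + 1 if i < extra else base for i in range(versions)]


test_size = compute_test_audience(10_000, 20, relative=True)  # 20% of 10,000
per_version = split_over_versions(test_size, 3)
remaining = 10_000 - test_size  # contacts left for the best version
```

With an audience of 10,000 contacts, a relative test audience of 20% and three versions, 2,000 contacts take part in the test and 8,000 remain for the best version.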

3. Define how the evaluation of the emails should be done. The following options are available:

- Manually select the best version — This is the best-performing method of evaluating the results. When launching the journey, first you launch the test. Next, you check the test results and decide which email is the best version. Then you manually launch the journey again to send out the best version.

- Automatically after — This evaluation method starts sending the best version of the email to the remaining contacts in the audience after a user-specified period of time.

- Automatically launch on — This method starts sending the best version of the email to the remaining contacts in the audience at a user-specified moment in time.

NOTE: When sending the best version automatically, make sure there are enough views, clicks, etc. to make an informed decision. Sending the best version after 10 minutes or even an hour might not be a good idea. If only one contact clicked an email, that would show as the best version when it might not be.
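The risk described in this note can be made concrete with a small calculation. The sketch below is illustrative only: the figures are invented, and the thresholds `min_views` and `min_clicks` are hypothetical values, not product settings.

```python
def rate(events: int, delivered: int) -> float:
    """Share of delivered emails that produced the event (0.0 if none delivered)."""
    return events / delivered if delivered else 0.0


def enough_data(views: int, clicks: int,
                min_views: int = 200, min_clicks: int = 30) -> bool:
    """Hypothetical rule of thumb: require a minimum number of views and clicks."""
    return views >= min_views and clicks >= min_clicks


# Invented snapshot ten minutes after the test was launched:
# version -> (delivered, views, clicks)
early = {"A": (500, 12, 1), "B": (500, 15, 0)}

# Version A "wins" on click-through rate because of a single click.
leader = max(early, key=lambda v: rate(early[v][2], early[v][0]))

# None of the versions has enough responses to support that conclusion.
reliable = all(enough_data(views, clicks) for _, views, clicks in early.values())
```

Here `leader` is version A, but `reliable` is false: one click out of 500 delivered emails is too little to separate the two versions, so a longer test period would be the safer choice.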

4. Define how the best version is evaluated. The following options are available:

- Highest view rate — The email most viewed

- Highest clickthrough rate — The email most clicked

- Highest revenue — Linked to webtracking

- Highest order count — Linked to webtracking
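The four criteria above amount to ranking the versions on a single metric. The following sketch shows the idea under stated assumptions: `VersionStats`, `CRITERIA` and `best_version` are hypothetical names, the figures are invented, and dividing views and clicks by the number of delivered emails is an assumption about how the rates are calculated.

```python
from dataclasses import dataclass


@dataclass
class VersionStats:
    name: str
    delivered: int
    views: int
    clicks: int
    revenue: float  # reported through web tracking
    orders: int     # reported through web tracking


# One scoring function per evaluation option.
CRITERIA = {
    "view_rate": lambda v: v.views / v.delivered if v.delivered else 0.0,
    "click_through_rate": lambda v: v.clicks / v.delivered if v.delivered else 0.0,
    "revenue": lambda v: v.revenue,
    "order_count": lambda v: v.orders,
}


def best_version(stats: list[VersionStats], criterion: str) -> VersionStats:
    """Return the version with the highest score; on a tie the first one wins."""
    return max(stats, key=CRITERIA[criterion])


stats = [
    VersionStats("A", delivered=1000, views=400, clicks=50, revenue=900.0, orders=12),
    VersionStats("B", delivered=1000, views=350, clicks=80, revenue=1200.0, orders=10),
    VersionStats("C", delivered=1000, views=300, clicks=60, revenue=700.0, orders=15),
]

winner_by_views = best_version(stats, "view_rate").name
winner_by_orders = best_version(stats, "order_count").name
```

With these figures, version A has the highest view rate, version B has the highest click-through rate and revenue, and version C has the highest order count. The chosen criterion can therefore change which version is sent to the remaining contacts.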

NOTE on Webtrack:

When a contact clicks in a Selligent email and continues to your website, you can send data back to Selligent from that website. By using Webtrack you can measure the conversion from your email to your website. You can evaluate if the email resulted in a purchase, or measure 'revenue' or 'order count' through Webtrack's shopping tracker. See Webtrack for more information.

A Test description of the A/B test component is available at the bottom of the screen.

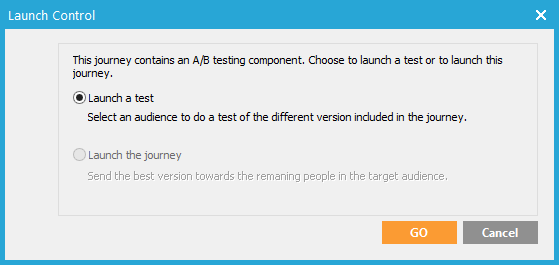

Launching an A/B test journey

When an A/B test journey is launched for the first time, a dialog appears offering a choice between 'Launch a test' and 'Launch the journey'.

NOTE: A manually selected best version is used only when the A/B test component's properties are set to 'Manual'. If 'Automatically' is selected instead, the system calculates the best version itself and uses it to launch the journey a second time. In that case, you only need to select 'Launch a test'.

Leave the option 'Launch a test' selected and press the 'GO' button to send out the different email versions to a part of the target audience.

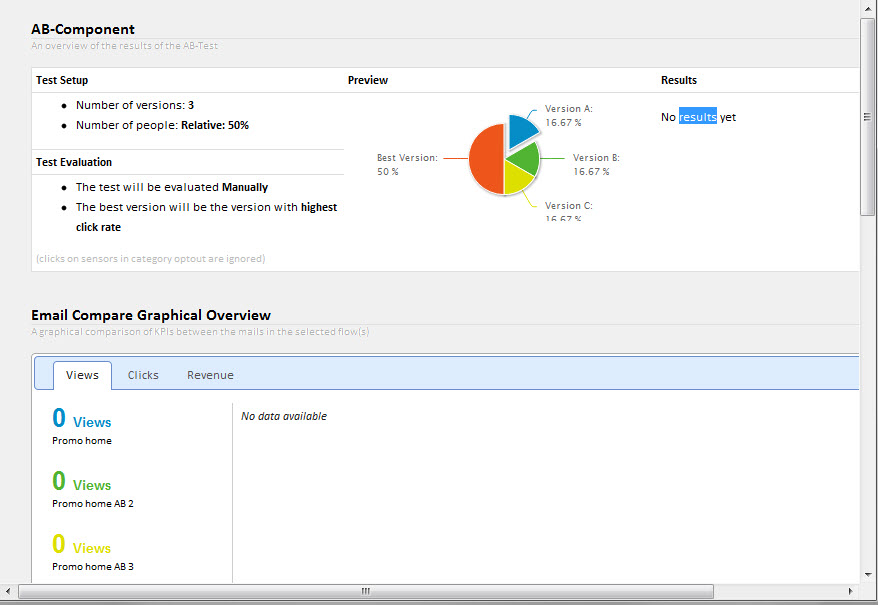

After sending out the test emails, right-click the A/B test component and select 'Test results' to review the test results.

The different email versions are listed. For each email version, information is available on:

- The number of emails viewed

- The number of emails clicked

- The revenue

The lower part of the dialog supplies statistical information on the delivery of these emails. This information can be used to manually define the winner.